Text- and image-based memes are giving way to voice-based memes, the new favorites on social media, especially on TikTok and YouTube. Generative artificial intelligence is being used to produce a growing number of videos that feature politicians such as Joe Biden and Donald Trump and spread misinformation and conspiracy theories, according to research by the brand safety technology company Zefr.

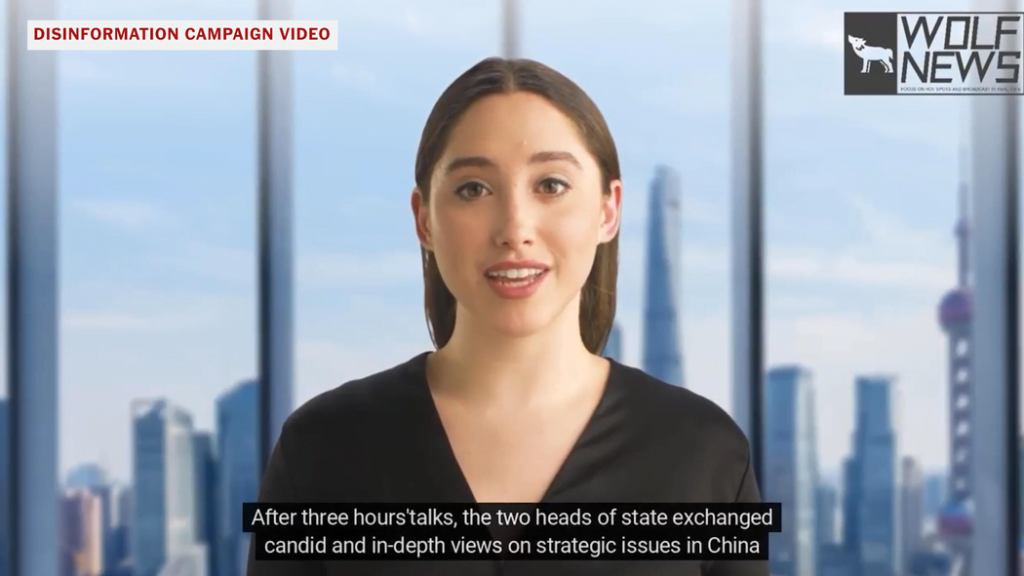

Artificial Intelligence Videos

The number of these AI-generated videos on social media has already exceeded the 2022 total by more than 130%, and the videos have drawn over 11 million views and 2 million likes. Additionally, over 90% of these videos can be monetized, meaning ads can run alongside the misleading content.

Photo: Adweek

Advertisers are increasingly concerned about combating misinformation, but current moderation tools are not sufficient to properly filter out AI-generated videos.

Spread of Misinformation

Text-to-speech tools such as Microsoft’s VALL-E and open-source tools like fakeyou.com make it easy for bad actors to spread misinformation on social media. Creators also use ChatGPT to write scripts for these videos.

Current anti-misinformation tools, such as keyword blacklists and static inclusion lists, are not sufficient to detect these deepfakes, which raises real concerns for future elections.
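To illustrate why list-based filtering falls short, here is a minimal Python sketch of a keyword blacklist combined with a static inclusion list. The keyword set, channel names, video fields, and sample records are all hypothetical and do not reflect any vendor's actual tooling; the sketch assumes the filter only ever sees a video's title and description.

```python
# Hypothetical lists, for illustration only.
BLOCKED_KEYWORDS = {"conspiracy", "hoax", "rigged"}
ALLOWED_CHANNELS = {"trusted_news_channel"}


def passes_keyword_blacklist(text: str) -> bool:
    """Return True when none of the blocked keywords appear in the text."""
    words = set(text.lower().split())
    return words.isdisjoint(BLOCKED_KEYWORDS)


def is_ad_eligible(video: dict) -> bool:
    """Eligible if the channel is on the inclusion list or the metadata is clean."""
    if video["channel"] in ALLOWED_CHANNELS:
        return True
    metadata = f'{video["title"]} {video["description"]}'
    return passes_keyword_blacklist(metadata)


# Hypothetical voice-cloned meme: harmless metadata, misleading audio.
deepfake = {
    "channel": "meme_account",
    "title": "Presidents play video games",
    "description": "funny voice meme",
    "audio_transcript": "the election was rigged",  # never inspected by the filter
}

# Hypothetical video whose title itself contains a blocked keyword.
flagged = {
    "channel": "other_account",
    "title": "The election hoax explained",
    "description": "",
    "audio_transcript": "",
}

print(is_ad_eligible(deepfake))  # the metadata contains no blocked keyword
print(is_ad_eligible(flagged))   # "hoax" appears in the title
```

In this sketch the voice-cloned video is treated as ad-eligible because the misleading claim exists only in the audio track, which the list-based check never examines.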

Security Risk

Outside of social media, advertisers can turn to keyword blacklists or context-driven AI tools from third-party verification companies such as DoubleVerify and Integral Ad Science (IAS) to keep their ads on safe, brand-appropriate content. Additionally, the industry is becoming more careful in choosing its business partners to avoid junk inventory. However, these tools can be ineffective on social media, which requires content analysis beyond website domains.

Photo: The New York Times

The use of generative AI to create videos of politicians spreading misinformation poses a real risk to brand safety on social media. Current moderation tools are not enough to combat these deepfakes, so more advanced and scalable AI fact-checking tools are needed to ensure brand safety.